English to Chinese Speech Translator using Python Flask

In one of my recent keynote addresses in Taipei, I spoke about solving pain points by coding with the help of generative AI.

The night before the talk, I figured that I could build an English to Chinese translator app to give my mainly Chinese-speaking audience real-time translation. I am sure there is something on the market that already does the job, but my point was about tinkering and solving pain points (and scratching the itch to create!).

Thanks to generative AI, a simple tool was born, and I placed it alongside my slides. It picked up my speech through the microphone on my notebook and translated it to Chinese text. You can test it here (while it is still there, before my next tinkering overrides the deployment): https://app-keefellow.pythonanywhere.com/

Sharing the code below for posterity.

The 4 key steps in this English to Chinese speech translation app are as follows:

Speech Recognition Setup: The app uses the Web Speech API to continuously listen for English speech input, displaying both interim and final results in real-time.

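Translation Processing: When final speech results are detected, they're sent to a Flask backend endpoint ('/translate'), which calls an unofficial, keyless Google Translate endpoint (translate_a/single, rather than the official Cloud Translation API) to convert the English text to Chinese.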

Server Configuration: The Flask server handles the translation requests and serves the web interface, with routes for the main page, output page, and the translation API endpoint.

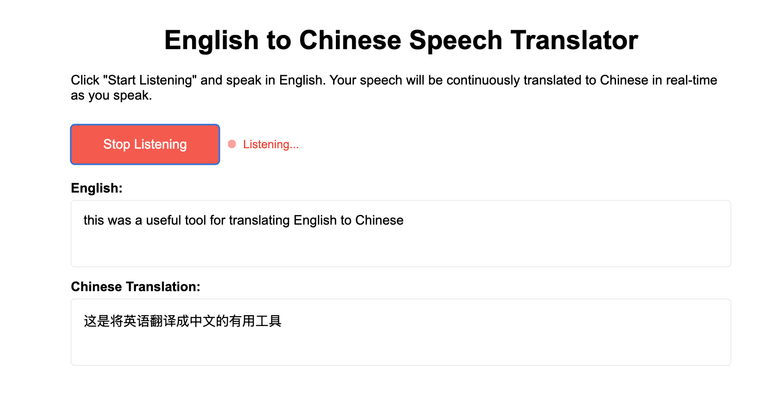

User Interface Display: The app presents a clean interface with a "Start/Stop Listening" button and two text boxes that show the original English speech input and its Chinese translation in real-time.
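The subtle part of the first two steps is deciding which speech results to send for translation. In the browser, the results list keeps growing over a session, and `event.resultIndex` marks the first entry that changed in the current event. Below is a minimal Python model of that bookkeeping, assuming each result is reduced to its best transcript plus an `isFinal` flag; `SpeechResult` and `process_results` are hypothetical names used only for illustration, not part of the Web Speech API.

```python
from dataclasses import dataclass


@dataclass
class SpeechResult:
    """Hypothetical stand-in for one browser speech result: best transcript + isFinal flag."""
    transcript: str
    is_final: bool


def process_results(results, result_index):
    """Model of the onresult loop: return (new final phrases to translate, interim text).

    Entries before result_index were already handled by an earlier event,
    so only entries at or after it are examined.
    """
    new_finals = []
    interim = ""
    for result in results[result_index:]:
        if result.is_final:
            new_finals.append(result.transcript)  # would be sent to /translate
        else:
            interim += result.transcript          # shown in grey while speaking
    return new_finals, interim


# First event: one finished phrase, one still being spoken
first = [SpeechResult("hello everyone", True), SpeechResult(" welcome to", False)]
print(process_results(first, 0))

# Later event: the second phrase is finalised; resultIndex now points at it
second = [SpeechResult("hello everyone", True), SpeechResult(" welcome to Taipei", True)]
print(process_results(second, 1))
```

In the second call only the newly finalised phrase comes back, which is why each sentence is translated once instead of the whole session being re-sent.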

I'd love to hear your ideas on how this can be customised.

```python
import os

import requests
from flask import Flask, jsonify, render_template, request

app = Flask(__name__, static_folder='static')


# Translate text using Google's unofficial "gtx" translate endpoint
def translate_text(text, target_lang='zh-CN'):
    try:
        base_url = "https://translate.googleapis.com/translate_a/single"
        params = {
            "client": "gtx",
            "sl": "en",          # source language
            "tl": target_lang,   # target language
            "dt": "t",
            "q": text,
        }
        # A timeout stops a slow upstream from hanging the request indefinitely
        response = requests.get(base_url, params=params, timeout=10)
        if response.status_code != 200:
            return f"Translation Error: {response.status_code}"
        try:
            result = response.json()
        except ValueError:
            # Response body was not valid JSON
            return "Translation Error: Invalid response format"
        # result[0] is a list of segments; segment[0] holds the translated chunk
        return ''.join(segment[0] for segment in result[0] if segment[0])
    except Exception as e:
        return f"Translation Error: {e}"


@app.route('/')
def index():
    return render_template('index.html')


@app.route('/output')
def output():
    # Note: this script does not generate templates/output.html; it must exist separately
    return render_template('output.html')


@app.route('/translate', methods=['POST'])
def translate():
    try:
        # silent=True returns None for a missing or malformed JSON body
        data = request.get_json(silent=True)
        if not data or 'text' not in data:
            return jsonify({'error': 'No text provided'}), 400
        english_text = data['text']
        chinese_text = translate_text(english_text)  # Translate to Chinese
        return jsonify({'english_text': english_text, 'chinese_text': chinese_text})
    except Exception as e:
        return jsonify({'error': str(e)}), 500


if __name__ == '__main__':
    # Create directories if they don't exist
    os.makedirs('templates', exist_ok=True)
    os.makedirs('static', exist_ok=True)

    # Write the main page template
    with open('templates/index.html', 'w', encoding='utf-8') as f:
        f.write('''<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>Speech Recording</title>
    <style>
        body {
            font-family: Arial, sans-serif;
            max-width: 800px;
            margin: 0 auto;
            padding: 20px;
        }
        h1 {
            text-align: center;
        }
        .container {
            margin-top: 20px;
        }
        .record-btn {
            background-color: #4CAF50;
            border: none;
            color: white;
            padding: 15px 30px;
            text-align: center;
            text-decoration: none;
            display: inline-block;
            font-size: 16px;
            margin: 10px 0;
            cursor: pointer;
            border-radius: 5px;
            width: 180px;
        }
        .record-btn.recording {
            background-color: #f44336;
            animation: pulse 1.5s infinite;
        }
        @keyframes pulse {
            0% { opacity: 1; }
            50% { opacity: 0.7; }
            100% { opacity: 1; }
        }
        .result-box {
            margin-top: 20px;
            padding: 15px;
            border: 1px solid #ddd;
            border-radius: 5px;
            min-height: 100px;
        }
        .transcript-area {
            width: 100%;
            min-height: 100px;
            padding: 10px;
            margin-bottom: 15px;
            font-size: 16px;
            border: 1px solid #ddd;
            border-radius: 5px;
        }
        .translate-btn {
            background-color: #2196F3;
            border: none;
            color: white;
            padding: 10px 20px;
            text-align: center;
            display: inline-block;
            font-size: 16px;
            margin: 5px 0 15px;
            cursor: pointer;
            border-radius: 5px;
        }
        .status-indicator {
            color: #666;
            font-style: italic;
            margin: 10px 0;
        }
        .text-container {
            display: flex;
            flex-direction: column;
            margin-bottom: 20px;
        }
        .text-box {
            border: 1px solid #ddd;
            border-radius: 5px;
            padding: 15px;
            margin-bottom: 15px;
            min-height: 50px;
        }
        .text-label {
            font-weight: bold;
            margin-bottom: 5px;
        }
        .listening-indicator {
            display: inline-block;
            margin-left: 10px;
            font-size: 14px;
            color: #f44336;
        }
        .listening-dot {
            display: inline-block;
            width: 10px;
            height: 10px;
            background-color: #f44336;
            border-radius: 50%;
            margin-right: 5px;
            animation: blink 1s infinite;
        }
        @keyframes blink {
            0% { opacity: 1; }
            50% { opacity: 0.2; }
            100% { opacity: 1; }
        }
    </style>
</head>
<body>
    <h1>English to Chinese Speech Translator</h1>
    <div class="container">
        <p>
            Click "Start Listening" and speak in English. Your speech will be continuously
            translated to Chinese in real-time as you speak.
        </p>
        <div style="display: flex; align-items: center;">
            <button id="recordBtn" class="record-btn">Start Listening</button>
            <div id="listeningIndicator" style="display: none;" class="listening-indicator">
                <span class="listening-dot"></span> Listening...
            </div>
        </div>
        <p id="recognitionStatus" class="status-indicator"></p>
        <div class="text-container">
            <div>
                <div class="text-label">English:</div>
                <div id="englishText" class="text-box"></div>
            </div>
            <div>
                <div class="text-label">Chinese Translation:</div>
                <div id="chineseText" class="text-box"></div>
            </div>
        </div>
    </div>

    <script>
        const recordBtn = document.getElementById('recordBtn');
        const englishText = document.getElementById('englishText');
        const chineseText = document.getElementById('chineseText');
        const recognitionStatus = document.getElementById('recognitionStatus');
        const listeningIndicator = document.getElementById('listeningIndicator');

        let recognition;
        let isListening = false;
        let lastFinalResult = '';  // most recent finalised English phrase

        // Initialize Web Speech API (Chrome/Edge expose it as webkitSpeechRecognition)
        function initSpeechRecognition() {
            if ('webkitSpeechRecognition' in window) {
                recognition = new webkitSpeechRecognition();
            } else if ('SpeechRecognition' in window) {
                recognition = new SpeechRecognition();
            } else {
                alert('Your browser does not support speech recognition. Try Chrome or Edge.');
                return false;
            }

            recognition.continuous = true;      // keep listening across pauses
            recognition.interimResults = true;  // emit partial results while speaking
            recognition.lang = 'en-US';

            recognition.onstart = function() {
                isListening = true;
                recordBtn.textContent = 'Stop Listening';
                recordBtn.classList.add('recording');
                listeningIndicator.style.display = 'inline-block';
                recognitionStatus.textContent = '';
                lastFinalResult = '';  // reset for the new session
            };

            recognition.onend = function() {
                if (isListening) {
                    // Browsers may end a continuous session on their own;
                    // restart unless the user pressed Stop
                    try {
                        recognition.start();
                    } catch (e) {
                        console.error('Failed to restart recognition:', e);
                        stopListening();
                    }
                } else {
                    stopListening();
                }
            };

            recognition.onerror = function(event) {
                console.error('Speech recognition error', event.error);
                if (event.error === 'no-speech') {
                    // Fires after a stretch of silence; no need to show it or stop listening
                    return;
                }
                recognitionStatus.textContent = 'Error: ' + event.error;
                // Stop on errors that an automatic restart cannot fix
                if (event.error === 'network' || event.error === 'not-allowed' ||
                    event.error === 'service-not-allowed') {
                    stopListening();
                }
            };

            recognition.onresult = function(event) {
                let interimTranscript = '';
                // event.results holds every result of this session; entries before
                // event.resultIndex were already handled by an earlier event
                for (let i = event.resultIndex; i < event.results.length; ++i) {
                    const transcript = event.results[i][0].transcript;
                    if (event.results[i].isFinal) {
                        lastFinalResult = transcript;
                        translateText(transcript);  // send only newly finalised phrases
                    } else {
                        interimTranscript += transcript;
                    }
                }
                // Show interim text in grey while speaking, otherwise the last final phrase.
                // textContent avoids interpreting the transcript as HTML.
                if (interimTranscript) {
                    englishText.style.color = '#999';
                    englishText.textContent = interimTranscript;
                } else if (lastFinalResult) {
                    englishText.style.color = '';
                    englishText.textContent = lastFinalResult;
                }
            };

            return true;
        }

        function stopListening() {
            isListening = false;
            if (recognition) {
                try {
                    recognition.stop();
                } catch (e) {
                    console.error('Error stopping recognition:', e);
                }
            }
            recordBtn.textContent = 'Start Listening';
            recordBtn.classList.remove('recording');
            listeningIndicator.style.display = 'none';
        }

        // Toggle listening
        recordBtn.addEventListener('click', function() {
            if (!recognition && !initSpeechRecognition()) {
                return;
            }
            if (isListening) {
                stopListening();
            } else {
                // Clear previous text when starting a new session
                englishText.textContent = '';
                try {
                    recognition.start();
                } catch (e) {
                    console.error('Error starting recognition:', e);
                    alert('Could not start speech recognition. Please refresh the page and try again.');
                }
            }
        });

        // Translate text using the Flask API
        async function translateText(text) {
            if (!text || text.trim() === '') return;
            try {
                chineseText.innerHTML = '<em>Translating...</em>';
                const response = await fetch('/translate', {
                    method: 'POST',
                    headers: { 'Content-Type': 'application/json' },
                    body: JSON.stringify({ text: text })
                });
                if (!response.ok) {
                    throw new Error(`Server responded with ${response.status}`);
                }
                const data = await response.json();
                chineseText.textContent = data.error ? 'Error: ' + data.error : data.chinese_text;
            } catch (error) {
                console.error('Error:', error);
                chineseText.textContent = 'Error: ' + error.message;
            }
        }

        // Initialize the page
        document.addEventListener('DOMContentLoaded', function() {
            initSpeechRecognition();
        });
    </script>
</body>
</html>
''')

    app.run(debug=True)
```
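To see what translate_text() sends and receives without starting the server, the sketch below rebuilds the query URL with the standard library and flattens a response of the nested-list shape the code expects. The sample payload is hand-written to match that shape (the first element is a list of segments, each starting with the translated chunk); it is not a captured response, and since the endpoint is undocumented its format could change. `build_translate_url`, `join_segments` and `SAMPLE_RESPONSE` are illustrative names, not part of any library.

```python
import json
from urllib.parse import urlencode

BASE_URL = "https://translate.googleapis.com/translate_a/single"


def build_translate_url(text, target_lang="zh-CN"):
    """Build a GET URL with the same query parameters translate_text() passes to requests."""
    params = {"client": "gtx", "sl": "en", "tl": target_lang, "dt": "t", "q": text}
    return BASE_URL + "?" + urlencode(params)


def join_segments(payload):
    """Concatenate translated chunks: payload[0] lists segments; segment[0] is translated text."""
    return "".join(segment[0] for segment in payload[0] if segment and segment[0])


# Hand-written example of the assumed response shape (not real API output)
SAMPLE_RESPONSE = json.loads(
    '[[["你好。","Hello.",null,null],["欢迎。","Welcome.",null,null]],null,"en"]'
)

print(build_translate_url("Hello world"))
print(join_segments(SAMPLE_RESPONSE))
```

Long inputs come back split into several segments, which is why the app joins every segment instead of reading only the first one.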
